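Deploying BERT on the MRPC Task, from Local Machine to Cloud: Notes on the Pitfalls

1. Getting started (pitfalls)

Starting point: macanv/BERT-BiLSTM-CRF-NER: Tensorflow solution of NER task using BiLSTM-CRF model with Google BERT fine-tuning and private server services (github.com)

I searched the Papers with Code website for ways to set up the dataset.

A paper from London University is a useful reference.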

Create a new conda environment:

conda create --name BERT python=3.8

cd D:\code\BERT-BiLSTM-CRF-NER-master

python setup.py install

Then try: bert-base-ner-train -help

This produced the following messages:

2023-02-08 23:49:08.886024: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cudart64_101.dll'; dlerror: cudart64_101.dll not found

2023-02-08 23:49:08.886177: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

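Copying cudart64_101.dll (the CUDA 10.1 runtime library) into C:\Windows\System32 cleared the first message. It is only a warning: as the second log line says, it can be ignored on a machine without a GPU set up.

Then a second error appeared: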

ModuleNotFoundError: No module named 'google.protobuf'

Reference: "ModuleNotFoundError: No module named google.protobuf: how to fix it" (CSDN blog of 就是叫这个名字)

Fix: pip install protobuf

This led to another error:

TypeError: Descriptors cannot not be created directly.

If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.

If you cannot immediately regenerate your protos, some other possible workarounds are:

1. Downgrade the protobuf package to 3.20.x or lower.

2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

pip uninstall protobuf

pip install protobuf==3.20.1
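The error message lists two workarounds, and the commands above use the first one (pin protobuf 3.20.x). Below is a minimal sketch for checking whether the installed protobuf needs that downgrade. It also shows how workaround 2 could be applied as a stop-gap. The helper names are hypothetical. `importlib.metadata` assumes Python 3.8+. The environment variable only takes effect if it is set in the same process before TensorFlow (and therefore protobuf) is imported.

```python
import os
from importlib import metadata  # Python 3.8+


def protobuf_needs_downgrade(version: str) -> bool:
    """Return True if a protobuf version is newer than the 3.20.x line."""
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) > (3, 20)


def use_pure_python_protobuf() -> None:
    """Workaround 2 from the error text: switch protobuf to its pure-Python parser.

    Call this at the top of the training script, before importing tensorflow.
    """
    os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"


def installed_protobuf():
    """Installed protobuf version string, or None if the package is absent."""
    try:
        return metadata.version("protobuf")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_protobuf()
    if version and protobuf_needs_downgrade(version):
        print(f"protobuf {version} is too new; try: pip install protobuf==3.20.1")
```

Pinning the version (workaround 1) is usually preferable, because the pure-Python parser is much slower, as the error message itself warns.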

Then yet another error appeared:

ModuleNotFoundError: No module named 'absl'

Fix (see the CSDN blog of 糖尛果 on "ModuleNotFoundError: No module named 'absl'"):

pip install absl-py

Next error: ModuleNotFoundError: No module named 'wrapt'

Reference: [No module named 'wrapt': how to fix it (CSDN blog of 三角粽)](https://blog.csdn.net/weixin_47594795/article/details/117284425)

pip install wrapt

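New error: AttributeError: module 'numpy' has no attribute 'object'.

The cause is a NumPy release that is too new for this TensorFlow: the np.object alias was deprecated in NumPy 1.20 and removed in 1.24. At this point pip also reported the following dependency conflicts: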

ERROR: pip’s dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

tensorflow-gpu 2.2.0 requires astunparse==1.6.3, which is not installed.

tensorflow-gpu 2.2.0 requires gast==0.3.3, which is not installed.

tensorflow-gpu 2.2.0 requires google-pasta>=0.1.8, which is not installed.

tensorflow-gpu 2.2.0 requires grpcio>=1.8.6, which is not installed.

tensorflow-gpu 2.2.0 requires h5py<2.11.0,>=2.10.0, which is not installed.

tensorflow-gpu 2.2.0 requires keras-preprocessing>=1.1.0, which is not installed.

tensorflow-gpu 2.2.0 requires opt-einsum>=2.3.2, which is not installed.

tensorflow-gpu 2.2.0 requires scipy==1.4.1; python_version >= "3", which is not installed.

tensorflow-gpu 2.2.0 requires tensorflow-gpu-estimator<2.3.0,>=2.2.0, which is not installed.

tensorboard 2.2.2 requires google-auth-oauthlib<0.5,>=0.4.1, which is not installed.

tensorboard 2.2.2 requires grpcio>=1.24.3, which is not installed.

tensorboard 2.2.2 requires markdown>=2.6.8, which is not installed.

tensorboard 2.2.2 requires requests<3,>=2.21.0, which is not installed.

tensorboard 2.2.2 requires tensorboard-plugin-wit>=1.6.0, which is not installed.

tensorboard 2.2.2 requires werkzeug>=0.11.15, which is not installed.

Following a CSDN post by can903154417 on compatible TensorFlow and NumPy versions, downgrade NumPy:

pip install numpy==1.19.5

tensorflow-gpu 2.2.0 requires h5py<2.11.0,>=2.10.0, which is not installed.

tensorflow-gpu 2.2.0 requires keras-preprocessing>=1.1.0, which is not installed.

tensorflow-gpu 2.2.0 requires opt-einsum>=2.3.2, which is not installed.

tensorflow-gpu 2.2.0 requires scipy==1.4.1; python_version >= "3", which is not installed.

tensorflow-gpu 2.2.0 requires tensorflow-gpu-estimator<2.3.0,>=2.2.0, which is not installed.
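The NumPy downgrade works because the alias TensorFlow tripped over only disappeared in NumPy 1.24. Below is a small sketch for telling in advance whether a given NumPy version still provides `np.object`. It compares version strings only. The function names are hypothetical, and the version boundaries are NumPy's deprecation (1.20) and removal (1.24) of the alias.

```python
def _major_minor(version: str) -> tuple:
    """'1.19.5' -> (1, 19)."""
    major, minor = (int(part) for part in version.split(".")[:2])
    return major, minor


def numpy_has_object_alias(version: str) -> bool:
    """np.object exists (possibly with a DeprecationWarning) before NumPy 1.24."""
    return _major_minor(version) < (1, 24)


def numpy_object_alias_deprecated(version: str) -> bool:
    """NumPy 1.20 to 1.23 keep np.object but warn when it is used."""
    return (1, 20) <= _major_minor(version) < (1, 24)


if __name__ == "__main__":
    for v in ("1.19.5", "1.23.5", "1.24.0"):
        print(v, numpy_has_object_alias(v), numpy_object_alias_deprecated(v))
```

1.19.5, the version pinned above, is below both boundaries, so TensorFlow's use of `np.object` works silently.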

2023-02-10 00:16:08.839501: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cudart64_101.dll

Traceback (most recent call last):

File "C:\Users\14508\.conda\envs\BERT\Scripts\bert-base-ner-train-script.py", line 33, in <module>

sys.exit(load_entry_point('bert-base==0.0.9', 'console_scripts', 'bert-base-ner-train')())

File "C:\Users\14508\.conda\envs\BERT\lib\site-packages\bert_base-0.0.9-py3.8.egg\bert_base\runs\__init__.py", line 29, in train_ner

from bert_base.train.bert_lstm_ner import train

File "C:\Users\14508\.conda\envs\BERT\lib\site-packages\bert_base-0.0.9-py3.8.egg\bert_base\train\bert_lstm_ner.py", line 24, in <module>

from bert_base.bert import optimization

File "C:\Users\14508\.conda\envs\BERT\lib\site-packages\bert_base-0.0.9-py3.8.egg\bert_base\bert\optimization.py", line 84, in <module>

class AdamWeightDecayOptimizer(tf.train.Optimizer):

AttributeError: module 'tensorflow._api.v2.train' has no attribute 'Optimizer'

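Fix: still open at this point.

The cause of AttributeError: module 'tensorflow._api.v2.train' has no attribute 'Optimizer' turned out to be a TensorFlow version that was too new. TensorFlow 2.x no longer provides tf.train.Optimizer, which BERT's optimization.py subclasses.

The Stack Overflow question "Error importing BERT: module 'tensorflow._api.v2.train' has no attribute 'Optimizer'" (stackoverflow.com) suggests installing TensorFlow 1.15: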

pip install tensorflow-gpu==1.15.0
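For reference, TensorFlow 2.x still ships the TF1 class as `tf.compat.v1.train.Optimizer`. Below is a sketch of a helper that picks whichever base class exists. The function name is hypothetical. This only addresses this one line: the BERT code base relies on other TF1-only APIs (for example `tf.contrib`), so downgrading to TensorFlow 1.15, as done here, is the more dependable fix. To keep the sketch testable without TensorFlow, the module is passed in as an argument and checked against stand-in objects.

```python
from types import SimpleNamespace


def tf1_optimizer_base(tf):
    """Return the TF1-style Optimizer base class from a tensorflow module.

    TF 1.x exposes tf.train.Optimizer; TF 2.x keeps it as tf.compat.v1.train.Optimizer.
    """
    if hasattr(tf.train, "Optimizer"):
        return tf.train.Optimizer
    return tf.compat.v1.train.Optimizer


# Hypothetical use inside optimization.py:
#   class AdamWeightDecayOptimizer(tf1_optimizer_base(tf)):
#       ...

if __name__ == "__main__":
    # Stand-ins mimicking the TF 1.x and TF 2.x module layouts (no TensorFlow needed).
    class FakeOptimizer:
        pass

    fake_tf1 = SimpleNamespace(train=SimpleNamespace(Optimizer=FakeOptimizer))
    fake_tf2 = SimpleNamespace(
        train=SimpleNamespace(),
        compat=SimpleNamespace(v1=SimpleNamespace(train=SimpleNamespace(Optimizer=FakeOptimizer))),
    )
    print(tf1_optimizer_base(fake_tf1) is FakeOptimizer, tf1_optimizer_base(fake_tf2) is FakeOptimizer)
```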

However, tensorflow-gpu==1.15.0 cannot be installed on Python 3.8.

So I took the "extreme" route: delete the old environment and install a second CUDA version.

conda remove -n BERT --all

Install CUDA 10.0 and cuDNN 7.4.

Run the CUDA installer, wait for it to finish unpacking, and then click through the remaining steps.

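Once CUDA is installed, cuDNN has to be installed as well.

Download it from NVIDIA: CUDA Deep Neural Network library (cuDNN) | NVIDIA Developer

Unzipping the downloaded cuDNN archive yields three folders.

Next, locate the CUDA installation directory. Mine is: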

E:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0

Copy the contents of each of the three cuDNN folders into the folder of the same name under the CUDA directory.
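Below is a sketch of the copy step. It assumes the archive extracts to a `cuda` folder containing `bin`, `include` and `lib`, which is the usual layout of cuDNN 7 Windows zips. The concrete paths in `CUDNN_DIR`/`CUDA_DIR` are placeholders. It copies file by file rather than with `copytree(dirs_exist_ok=True)`, so it also runs on the Python 3.6 environment used later. Writing into Program Files normally needs an administrator prompt, so the demo runs against a temporary folder.

```python
import shutil
import tempfile
from pathlib import Path

# Placeholder locations: adjust to your extracted cuDNN archive and CUDA install.
CUDNN_DIR = Path(r"E:\Downloads\cudnn\cuda")
CUDA_DIR = Path(r"E:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0")


def merge_cudnn_into_cuda(cudnn_dir: Path, cuda_dir: Path,
                          subdirs=("bin", "include", "lib")) -> list:
    """Copy every file under cudnn_dir/<sub> into cuda_dir/<sub>, keeping sub-paths.

    Returns the copied files relative to cudnn_dir, as sorted POSIX-style strings.
    """
    copied = []
    for name in subdirs:
        src_root = cudnn_dir / name
        if not src_root.is_dir():
            raise FileNotFoundError(f"cuDNN folder not found: {src_root}")
        for src in src_root.rglob("*"):
            if src.is_file():
                rel = src.relative_to(cudnn_dir)
                dst = cuda_dir / rel
                dst.parent.mkdir(parents=True, exist_ok=True)
                shutil.copy2(src, dst)  # overwrites an existing file of the same name
                copied.append(rel.as_posix())
    return sorted(copied)


if __name__ == "__main__":
    # Dry run with stub files instead of the real CUDA directory.
    with tempfile.TemporaryDirectory() as tmp:
        fake_cudnn, fake_cuda = Path(tmp, "cudnn"), Path(tmp, "cuda")
        for rel in ("bin/cudnn64_7.dll", "include/cudnn.h", "lib/x64/cudnn.lib"):
            (fake_cudnn / rel).parent.mkdir(parents=True, exist_ok=True)
            (fake_cudnn / rel).write_text("stub")
        print(merge_cudnn_into_cuda(fake_cudnn, fake_cuda))
```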

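Add environment variables for cuDNN:

Open Environment Variables > System variables > Path and add the following three entries to complete the installation.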
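After editing Path, it helps to confirm the entries really are there. The text does not list the three entries, so the `WANTED` list below is a placeholder assumption (CUDA's `bin`, `libnvvp` and `lib\x64`). The helper name is hypothetical. The comparison ignores case and trailing backslashes, as Windows does.

```python
import os

# Placeholder entries: replace with the three directories you actually added.
CUDA_ROOT = r"E:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0"
WANTED = [CUDA_ROOT + r"\bin", CUDA_ROOT + r"\libnvvp", CUDA_ROOT + r"\lib\x64"]


def _norm(entry: str) -> str:
    # Windows paths are case-insensitive; ignore surrounding spaces and trailing backslashes.
    return entry.strip().rstrip("\\").lower()


def missing_path_entries(wanted, path_value: str, sep: str = ";") -> list:
    """Return the wanted directories that do not appear in a PATH-style string."""
    present = {_norm(p) for p in path_value.split(sep) if p.strip()}
    return [w for w in wanted if _norm(w) not in present]


if __name__ == "__main__":
    # Open a new console first: PATH changes only reach newly started processes.
    for entry in missing_path_entries(WANTED, os.environ.get("PATH", ""), os.pathsep):
        print("not on PATH:", entry)
```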

References: "CUDA and cuDNN installation tutorial (very detailed)", CSDN blog of jhsignal,

and "How to check on Windows 10 whether CUDA and cuDNN installed successfully", also by jhsignal.

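To verify the installation, go to the CUDA demo folder, here C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.1\extras\demo_suite, which contains two .exe files: deviceQuery.exe and bandwidthTest.exe.

First run deviceQuery.exe and check that it prints a report for your GPU. If the window flashes and closes immediately, open cmd first, drag the .exe into it, and press Enter while holding Shift. The window then stays open; this worked in my tests.

Then run bandwidthTest.exe. If it also prints its report, the installation works. (Strictly speaking, both tools exercise the CUDA driver and runtime, not cuDNN itself.)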
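Another way to avoid the flashing window is to run the tools from Python and capture their output. The sketch below assumes both tools end a successful run with the line `Result = PASS`. The folder comes from the text above; adjust v10.0/v10.1 to match your install. It uses `universal_newlines` instead of `capture_output`/`text`, so it also works on Python 3.6. The helper names are hypothetical.

```python
import subprocess
from pathlib import Path

# Folder from the text above; adjust the version to match your CUDA install.
DEMO_SUITE = Path(r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.1\extras\demo_suite")


def passed(report: str) -> bool:
    """Assumes a successful run ends its report with 'Result = PASS'."""
    return "Result = PASS" in report


def run_demo(exe_name: str, folder: Path = DEMO_SUITE, timeout: int = 300) -> str:
    """Run a demo_suite tool and return its combined output instead of flashing a window."""
    proc = subprocess.run(
        [str(folder / exe_name)],
        stdin=subprocess.DEVNULL,      # never block waiting for a key press
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,       # text mode, Python 3.6 compatible
        timeout=timeout,
    )
    return proc.stdout
```

Usage on the Windows machine: `for exe in ("deviceQuery.exe", "bandwidthTest.exe"): print(exe, passed(run_demo(exe)))`.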

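For installing several CUDA versions side by side, see this blog post:

"A detailed guide to installing multiple CUDA versions on Windows 10" (CSDN blog of Al Hg)

In short: to use CUDA 11.1, disable CUDA 10.0 by switching the environment variables so they point at the other version.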
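The "disable the other version" step amounts to rewriting PATH. Below is a sketch of that as a pure string transformation. The function name is hypothetical. It assumes CUDA folders follow the standard `...\CUDA\vX.Y\...` naming. The CUDA installer also sets variables such as `CUDA_PATH`, which this sketch leaves alone.

```python
import re

# Matches toolkit folders such as ...\CUDA\v10.0\bin or ...\CUDA\v11.1 (case-insensitive).
_CUDA_DIR = re.compile(r"\\CUDA\\v(\d+\.\d+)(\\|$)", re.IGNORECASE)


def prefer_cuda_version(path_value: str, version: str, sep: str = ";") -> str:
    """Rebuild a PATH string so only the chosen CUDA version's entries remain.

    Entries of other CUDA versions are dropped (the "disable" step), entries of the
    chosen version move to the front, and unrelated entries keep their order.
    """
    chosen, others = [], []
    for entry in filter(None, path_value.split(sep)):
        match = _CUDA_DIR.search(entry)
        if match is None:
            others.append(entry)
        elif match.group(1) == version:
            chosen.append(entry)
        # else: an entry for another CUDA version, intentionally dropped
    return sep.join(chosen + others)
```

The result still has to be written back through the Environment Variables dialog (or `setx`) and picked up by a new console.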

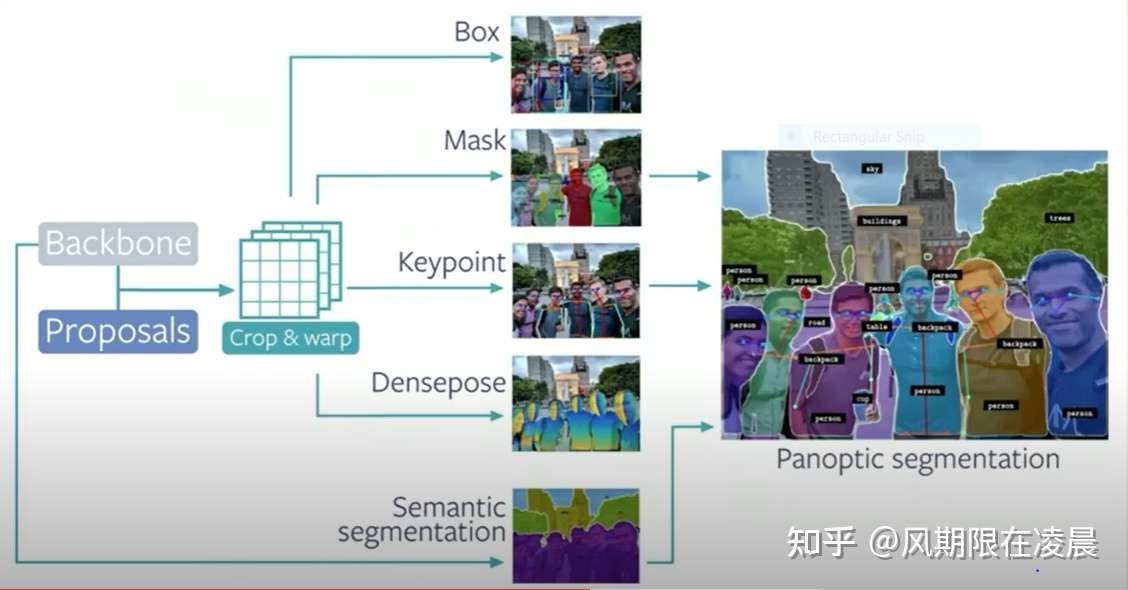

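For matching TensorFlow, Python, CUDA and cuDNN versions, refer to the compatibility chart in the image above.

tensorflow-gpu 1.15 supports only Python 3.5 to 3.7, so Python 3.6 is the version to install.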
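The chart can be encoded as a lookup table. Below is a sketch with just the two rows of TensorFlow's published "tested build configurations" (Windows, GPU) that matter in this article. tensorflow-gpu 2.2.0 pairs with CUDA 10.1, which is why it asked for cudart64_101.dll. tensorflow-gpu 1.15.0 pairs with CUDA 10 and cuDNN 7.4. The helper name is hypothetical.

```python
import sys

# Two rows of TensorFlow's tested build configurations (Windows, GPU),
# limited to the versions that appear in this article.
TESTED_WINDOWS_GPU = {
    "tensorflow_gpu-2.2.0": {"python": ((3, 5), (3, 8)), "cuda": "10.1", "cudnn": "7.6"},
    "tensorflow_gpu-1.15.0": {"python": ((3, 5), (3, 7)), "cuda": "10", "cudnn": "7.4"},
}


def python_supported(build: str, version=sys.version_info) -> bool:
    """True if the (major, minor) Python version lies in the tested range for build."""
    low, high = TESTED_WINDOWS_GPU[build]["python"]
    return low <= tuple(version[:2]) <= high


if __name__ == "__main__":
    for build, cfg in TESTED_WINDOWS_GPU.items():
        ok = python_supported(build)
        print(build, "CUDA", cfg["cuda"], "cuDNN", cfg["cudnn"],
              "OK for this Python" if ok else "needs another Python")
```

For example, `python_supported("tensorflow_gpu-1.15.0", (3, 8))` is False, which matches the failed install under Python 3.8.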

conda create --name BERT python=3.6

conda activate BERT

pip install tensorflow-gpu==1.15

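Installing the dependency keras-applications>=1.0.8 failed. (When typing such a requirement in a shell, quote it, as in pip install "keras-applications>=1.0.8"; otherwise > is treated as output redirection.)

The fix is to download the wheel file directly from the official package site

and install it locally: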

cd E:\AnacondaLib

(BERT) PS E:\AnacondaLib> pip install Keras_Applications-1.0.8-py3-none-any.whl

TensorFlow installed successfully.

Next, following the GitHub README, install the project:

cd D:\code\BERT-BiLSTM-CRF-NER-master

python setup.py install

Using c:\users\14508\.conda\envs\bert\lib\site-packages

Searching for numpy==1.19.5

Best match: numpy 1.19.5

Adding numpy 1.19.5 to easy-install.pth file

Installing f2py-script.py script to C:\Users\14508\.conda\envs\BERT\Scripts

Installing f2py.exe script to C:\Users\14508\.conda\envs\BERT\Scripts

Using c:\users\14508\.conda\envs\bert\lib\site-packages

Finished processing dependencies for bert-base==0.0.9

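The dependencies were installed successfully.

Next, continue with the GitHub instructions.

Use -help to list the parameters for training the NER model. data_dir, bert_config_file, output_dir, init_checkpoint and vocab_file must be specified.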
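Because five parameters are mandatory, a small wrapper can refuse to start a run that lacks one of them. Below is a sketch, assuming the single-dash flag style shown in the project's README (`-data_dir ...`). The function name is hypothetical, and any extra keyword arguments are passed through unchecked.

```python
REQUIRED = ("data_dir", "bert_config_file", "output_dir", "init_checkpoint", "vocab_file")


def build_ner_train_cmd(**params) -> list:
    """Assemble a bert-base-ner-train argument list, failing early if a required flag is missing."""
    missing = [name for name in REQUIRED if not params.get(name)]
    if missing:
        raise ValueError("missing required parameters: " + ", ".join(missing))
    cmd = ["bert-base-ner-train"]
    for name, value in params.items():
        cmd += [f"-{name}", str(value)]
    return cmd
```

Usage: `subprocess.run(build_ner_train_cmd(data_dir="NERdata", bert_config_file=..., output_dir=..., init_checkpoint=..., vocab_file=...), check=True)`. Passing the command as a list avoids shell quoting problems with Windows paths.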

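Run: bert-base-ner-train -help

The help text contains this note:

Note: "X", "[CLS]" and "[SEP]" are required; you only need to replace the data labels in this returned list with your own.

Alternatively, you can use the last piece of code to have the program read the labels from the training data automatically.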
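Below is a sketch of what "read the labels from the training data" could look like. It assumes a CoNLL-style file with one `token tag` pair per line and blank lines between sentences. The function names and any file path you pass in are hypothetical. It puts "O" first, then the other tags sorted, and always appends the three special labels the help text requires.

```python
SPECIAL_LABELS = ["X", "[CLS]", "[SEP]"]  # required, per the -help text


def labels_from_lines(lines) -> list:
    """Collect the tag column from 'token tag' lines; blank lines separate sentences."""
    tags = set()
    for line in lines:
        parts = line.split()
        if len(parts) >= 2:
            tags.add(parts[-1])  # the last column is the tag
    ordered = sorted(tags - {"O"})
    if "O" in tags:
        ordered.insert(0, "O")
    return ordered + [s for s in SPECIAL_LABELS if s not in ordered]


def labels_from_file(path: str, encoding: str = "utf-8") -> list:
    """Same as labels_from_lines, reading a training file from disk."""
    with open(path, encoding=encoding) as f:
        return labels_from_lines(f)
```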

2. Common BERT command formats (for reference only; they contain errors, so adapt them before running)

python fc.py \
-data_dir {your dataset dir} \
-output_dir {training output dir} \
-init_checkpoint {Google BERT model dir} \
-bert_config_file {bert_config.json under the Google BERT model dir} \
-vocab_file {vocab.txt under the Google BERT model dir}

python3 fc.py --train=false --test=true --input_size=1536 --num_units=128 --batch_size=8 --epoches=4 --learning_rate=0.0001

bert-base-serving-start \
-model_dir C:\workspace\python\BERT_Base\output\ner2 \
-bert_model_dir F:\chinese_L-12_H-768_A-12 \
-model_pb_dir C:\workspace\python\BERT_Base\model_pb_dir \
-mode CLASS \
-max_seq_len 202


python bert_lstm_ner.py --task_name="NER" --do_train=True --do_eval=True --do_predict=True --data_dir=NERdata --vocab_file=checkpoint/vocab.txt --bert_config_file=checkpoint/bert_config.json --init_checkpoint=checkpoint/bert_model.ckpt --max_seq_length=128 --train_batch_size=32 --learning_rate=2e-5 --num_train_epochs=3.0 --output_dir=./output/result_dir/

Successfully ran run_classifier.py:

python run_classifier.py --task_name=MRPC --do_train=True --do_eval=True --data_dir=D:/code/BERT-open/GLUE/glue_data/MRPC --vocab_file=D:/code/BERT-open/GLUE/BERT_BASE_DIR/chinese_L-12_H-768_A-12/vocab.txt --bert_config_file=D:/code/BERT-open/GLUE/BERT_BASE_DIR/chinese_L-12_H-768_A-12/bert_config.json --init_checkpoint=D:/code/BERT-open/GLUE/BERT_BASE_DIR/chinese_L-12_H-768_A-12/bert_model.ckpt --max_seq_length=128 --train_batch_size=8 --learning_rate=2e-5 --num_train_epochs=3.0 --output_dir=D:/code/BERT-open/GLUE/output

The original command:

python run_classifier.py --task_name=MRPC --do_train=true --do_eval=true --data_dir=$GLUE_DIR/MRPC --vocab_file=$BERT_BASE_DIR/vocab.txt --bert_config_file=$BERT_BASE_DIR/bert_config.json --init_checkpoint=$BERT_BASE_DIR/bert_model.ckpt --max_seq_length=128 --train_batch_size=32 --learning_rate=2e-5 --num_train_epochs=3.0 --output_dir=/tmp/mrpc_output/

After my modifications (local paths, train_batch_size reduced to 2):

python run_classifier.py --task_name=MRPC --do_train=true --do_eval=true --data_dir=D:/code/BERT-open/GLUE/glue_data/MRPC --vocab_file=D:/code/BERT-open/GLUE/BERT_BASE_DIR/chinese_L-12_H-768_A-12/vocab.txt --bert_config_file=D:/code/BERT-open/GLUE/BERT_BASE_DIR/chinese_L-12_H-768_A-12/bert_config.json --init_checkpoint=D:/code/BERT-open/GLUE/BERT_BASE_DIR/chinese_L-12_H-768_A-12/bert_model.ckpt --max_seq_length=128 --train_batch_size=2 --learning_rate=2e-5 --num_train_epochs=3.0 --output_dir=D:/code/BERT-open/GLUE/output2


Finished running run_classifier in the BERT fake news detection code and

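3. Running the code in the cloud (Google Colab)

After reading some posts on CSDN and Stack Overflow, I learned that the OOM (out-of-memory) error meant the batch size had to be reduced. I therefore turned to Google Colab, a free cloud platform.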
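How far to reduce the batch size can be estimated from the "Out-of-memory issues" table in the google-research/bert README. It lists the largest batch sizes that fit on one 12 GB Titan X with TensorFlow 1.11.0. The sketch encodes that table; treat it as rough guidance on other GPUs and TensorFlow versions. The helper names are hypothetical.

```python
# Largest batch sizes on one 12 GB Titan X (TensorFlow 1.11.0), from the
# "Out-of-memory issues" table in the google-research/bert README.
MAX_BATCH_12GB = {
    "BERT-Base": {64: 64, 128: 32, 256: 16, 320: 14, 384: 12, 512: 6},
    "BERT-Large": {64: 12, 128: 6, 256: 2, 320: 1, 384: 0, 512: 0},
}


def max_batch_size(model: str, max_seq_length: int) -> int:
    """Use the row for the smallest tabulated length that is >= max_seq_length."""
    table = MAX_BATCH_12GB[model]
    for length in sorted(table):
        if max_seq_length <= length:
            return table[length]
    raise ValueError(f"max_seq_length={max_seq_length} is beyond the table (512)")


def fits(model: str, max_seq_length: int, train_batch_size: int) -> bool:
    """Rough check of a planned --train_batch_size against the table."""
    return train_batch_size <= max_batch_size(model, max_seq_length)
```

By this table, BERT-Base at max_seq_length=128 tops out around 32 on a 12 GB card, which matches the batch size of 32 used later on the 12 GB RTX A2000. BERT-Large at the same length tops out around 6.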

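Reference: "Fine-tuning a BERT model on Google's free GPU" (CSDN blog of LeoWood)

If your TensorFlow version is 2.x, the approach in that post applies. Unfortunately, my code needs TensorFlow 1.x, and Colab has dropped TensorFlow 1.x. Switching TensorFlow versions there used to be possible but no longer is.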

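4. Running the code in the cloud (Matpool GPU cloud)

I then moved to Matpool (矩池云), a cloud GPU platform.

I rented an NVIDIA RTX A2000 with 12 GB of GPU memory, on an instance with 30 GB of RAM.

Reference: "Connecting PyCharm to a GPU rented on Matpool to train neural networks" (CSDN blog of ZFour_X)

Reference: "Connecting to Matpool with Xshell to train a BERT-BiLSTM-CRF model" (CSDN blog of ZFour_X)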

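Once PyCharm's remote deployment and the shell connection to the cloud server were set up, I started training.

Matpool: a cloud provider focused on AI (matpool.com)

While fine-tuning BERT-Base on MRPC, the work ran mostly on the CPU. The GPU was never close to fully loaded; its utilization was probably below 1%.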
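Utilization below 1% together with little GPU memory in use usually means TensorFlow is not using the GPU at all. Possible causes include a CPU-only TensorFlow package or CUDA/cuDNN versions that do not match. Below is a sketch for sampling utilization from a script instead of eyeballing it. It relies on the `nvidia-smi` query options `--query-gpu` and `--format=csv,noheader,nounits`. The helper names are hypothetical.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_query(csv_text: str) -> list:
    """Turn nvidia-smi CSV rows into one dict per GPU (utilization %, memory in MiB)."""
    gpus = []
    for row in csv_text.strip().splitlines():
        util, used, total = (int(v.strip()) for v in row.split(","))
        gpus.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return gpus


def sample_gpu() -> list:
    """Query once; needs the NVIDIA driver's nvidia-smi on PATH."""
    out = subprocess.run(QUERY, stdout=subprocess.PIPE, universal_newlines=True, check=True).stdout
    return parse_gpu_query(out)
```

Calling `sample_gpu()` every few seconds during training shows quickly whether the run is really on the GPU.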

Along the way I hit another pitfall: after training for a long time, the run suddenly failed. On closer inspection, I had replaced bert/uncased_L-24_H-1024_A-16 with bert/Chinese_L-24_H-1024_A-16, which is a mismatched model for this training run, because during training it needs to

!python run_classifier.py --task_name=MRPC --do_train=true --do_eval=true --data_dir=data --vocab_file=gs://cloud-tpu-checkpoints/bert/uncased_L-24_H-1024_A-16/vocab.txt --bert_config_file=gs://cloud-tpu-checkpoints/bert/uncased_L-24_H-1024_A-16/bert_config.json --init_checkpoint=gs://cloud-tpu-checkpoints/bert/uncased_L-24_H-1024_A-16/bert_model.ckpt --max_seq_length=128 --train_batch_size=4 --learning_rate=2e-5 --num_train_epochs=3.0 --output_dir=output

For this error, see the CSDN blog of AI视觉网奇 on tensorflow.python.framework.errors_impl.DataLossError.

The gist: tensorflow.python.framework.errors_impl.DataLossError: not an sstable (bad magic number)

This error occurs when the checkpoint name passed to --input_checkpoint is wrong: the .data-00000-of-00001 suffix should not be included.

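From TensorFlow 1.13 on, loading a pretrained model requires the .index file, the weights file and the checkpoint file; if any of them is missing, an error is raised.

Another TensorFlow model-loading error, from the referenced post:

https://github.com/YadiraF/PRNet

PRNet is the 3D face reconstruction model proposed in "Joint 3D Face Reconstruction and Dense Alignment with Position Map Regression Network", published at ECCV 2018. It uses a neural network to predict 3D landmarks directly and achieves good results.

Error under TensorFlow 1.15: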

ValueError: The passed save_path is not a valid checkpoint: Data\net-data\256_256_resfcn256_weight

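Testing showed that this error is also raised when the model file does not exist.

The pretrained weights provided with the open-source network lacked the model's .index file.

Adding the missing file fixes it.

So in the training command, leave off the .data-00000-of-00001 suffix, but do not delete that file.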
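Both pitfalls can be caught before a long run starts: a suffix left on the checkpoint path, and missing companion files. The sketch below strips a trailing `.index`, `.meta` or `.data-XXXXX-of-XXXXX` to get the prefix TensorFlow expects. It then lists missing files, assuming the single-shard layout of the released BERT checkpoints (`bert_model.ckpt.data-00000-of-00001`). Requiring the `checkpoint` text file is optional, because only the quoted post states that TF 1.13+ needs it. The function names are hypothetical.

```python
import re
from pathlib import Path

# Suffixes of the individual checkpoint files; --init_checkpoint wants the prefix without them.
_SUFFIX = re.compile(r"\.(index|meta|data-\d{5}-of-\d{5})$")


def checkpoint_prefix(path: str) -> str:
    """'.../bert_model.ckpt.data-00000-of-00001' -> '.../bert_model.ckpt'."""
    return _SUFFIX.sub("", path)


def missing_checkpoint_files(prefix: str, need_checkpoint_file: bool = False) -> list:
    """List files that should sit next to the checkpoint prefix but do not exist."""
    p = Path(checkpoint_prefix(prefix))
    required = [
        p.with_name(p.name + ".index"),
        p.with_name(p.name + ".data-00000-of-00001"),  # single weight shard
    ]
    if need_checkpoint_file:
        required.append(p.with_name("checkpoint"))
    return [str(f) for f in required if not f.exists()]
```

Usage: pass `checkpoint_prefix(user_path)` as `--init_checkpoint`, and abort if `missing_checkpoint_files(user_path)` is not empty.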

The command I used to train on Matpool is below; a batch size of 32 works.

python run_classifier.py --task_name=MRPC --do_train=true --do_eval=true --do_predict --data_dir=/mnt/PyCharm_Project_1/GLUE/glue_data/MRPC --vocab_file=/mnt/PyCharm_Project_1/GLUE/BERT_BASE_DIR/uncased_L-12_H-768_A-12/vocab.txt --bert_config_file=/mnt/PyCharm_Project_1/GLUE/BERT_BASE_DIR/uncased_L-12_H-768_A-12/bert_config.json --init_checkpoint=/mnt/PyCharm_Project_1/GLUE/BERT_BASE_DIR/uncased_L-12_H-768_A-12/bert_model.ckpt --max_seq_length=128 --train_batch_size=32 --learning_rate=2e-5 --num_train_epochs=1.0 --output_dir=/mnt/PyCharm_Project_1/GLUE/output2

Let's check the MRPC result against a passage from the BERT GitHub README.

Sentence (and sentence-pair) classification tasks

Before running this example you must download the GLUE data by running this script and unpack it to some directory $GLUE_DIR. Next, download the BERT-Base checkpoint and unzip it to some directory $BERT_BASE_DIR.

This example code fine-tunes BERT-Base on the Microsoft Research Paraphrase Corpus (MRPC) corpus, which only contains 3,600 examples and can fine-tune in a few minutes on most GPUs.


export BERT_BASE_DIR=/path/to/bert/uncased_L-12_H-768_A-12

You should see output like this:

***** Eval results *****

This means that the Dev set accuracy was 84.55%. Small sets like MRPC have a high variance in the Dev set accuracy, even when starting from the same pre-training checkpoint. If you re-run multiple times (making sure to point to different output_dir), you should see results between 84% and 88%.

A few other pre-trained models are implemented off-the-shelf in run_classifier.py, so it should be straightforward to follow those examples to use BERT for any single-sentence or sentence-pair classification task.

Note: You might see a message Running train on CPU. This really just means that it’s running on something other than a Cloud TPU, which includes a GPU.


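After a little over an hour of training in the cloud, my model produced the results shown in the figure below.

The eval (dev set) accuracy was 81.37%. That is about 2.6 percentage points below the lower end of the 84% to 88% range quoted in the README. A likely contributor is that my run used --num_train_epochs=1.0, compared with 3.0 in the README command.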
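The GLUE MRPC dev set has 408 examples. The README's 84.55% therefore corresponds to 345 correct predictions, and 81.37% to about 332. Below is a sketch that reads `eval_results.txt`, which run_classifier.py writes into `--output_dir` as `key = value` lines, and turns the accuracy into those numbers. The function names are hypothetical.

```python
DEV_EXAMPLES = 408  # examples in the GLUE MRPC dev set


def parse_eval_results(text: str) -> dict:
    """Parse eval_results.txt written by run_classifier.py ('key = value' per line)."""
    results = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            results[key.strip()] = float(value)
    return results


def compare_to_readme(accuracy: float, low: float = 0.84, high: float = 0.88) -> dict:
    """Convert dev accuracy into correct predictions and its distance below the README range."""
    return {
        "correct": round(accuracy * DEV_EXAMPLES),
        "points_below_low": round((low - accuracy) * 100, 2),
        "in_range": low <= accuracy <= high,
    }
```

For the run above, `compare_to_readme(0.8137)` returns `{'correct': 332, 'points_below_low': 2.63, 'in_range': False}`. In practice, feed it `parse_eval_results(...)["eval_accuracy"]` read from `eval_results.txt` in the output directory.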